
Installation

Copy & paste this line into your terminal.

\curl -L https://install.perlbrew.pl | bash

Then, run the following line:

echo "source ~/perl5/perlbrew/etc/bashrc" >> ~/.profile

Then open a new shell, and you can start working with perlbrew there.

Everything is installed under ~/perl5/perlbrew/, and perlbrew only uses that directory and its subdirectories.

Basic usage

Get a list of available perls you can use:

perlbrew available

Install some version of perl:

perlbrew install perl-5.36.1

This compiles that perl version and installs it under ~/perl5/perlbrew/.

It takes a while (around 21 minutes on my laptop) and uses about 324 MB of disk space.

Now you can list all installed perl versions:

perlbrew list

To switch to a specific version, use the switch command:

perlbrew switch perl-5.36.1

To run a script with another version of perl without switching to it:

perlbrew exec --with perl-5.40.0 perl myprogram.pl

To run a script with a specific version of perl from cron, use something like this:

* * * * * PERLBREW_ROOT=$HOME/perl5/perlbrew ~/perl5/perlbrew/bin/perlbrew exec --with perl-5.36.1 perl ~/myprogram.pl

For more on cron tips, see: http://johnbokma.com/blog/2019/03/08/running-a-perl-program-via-cron.html
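If the crontab line becomes unwieldy, you can wrap the same call in a small shell function. This is a sketch and not part of perlbrew. `run_with_perlbrew` is a hypothetical name. It assumes perlbrew lives in its default root, `~/perl5/perlbrew`, unless PERLBREW_ROOT is already set.

```shell
#!/bin/sh
# Hypothetical helper: run a Perl script with a given perlbrew-installed perl,
# without sourcing perlbrew's bashrc (cron does not read ~/.profile).
run_with_perlbrew() {
    version="$1"   # e.g. perl-5.36.1
    shift          # remaining arguments: the script and its own arguments
    # Assumption: default perlbrew root unless PERLBREW_ROOT is already set
    PERLBREW_ROOT="${PERLBREW_ROOT:-$HOME/perl5/perlbrew}"
    export PERLBREW_ROOT
    "$PERLBREW_ROOT/bin/perlbrew" exec --with "$version" perl "$@"
}
```

For example, `run_with_perlbrew perl-5.36.1 ~/myprogram.pl` runs the script with that perl. Because the function calls perlbrew's own binary with PERLBREW_ROOT set, it does not depend on any shell startup file.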

To return to the system perl, switch perlbrew off:

perlbrew switch-off

For additional help:

perlbrew -h

perlbrew help

See more: https://perlbrew.pl/

See also: https://github.com/tokuhirom/plenv

"Before newsletters and social networks there was RSS, a tool that helped us keep up to date with our favorite websites.

...even bloggers began chasing clicks and page-views...

RSS still exists.

The Open Web isn’t just a dream or a memory, it is all around us. Time to take back what’s ours."

- Daniel Goldsmith

Published at: Wed, 08 Apr 2020 11:58:39 +0200

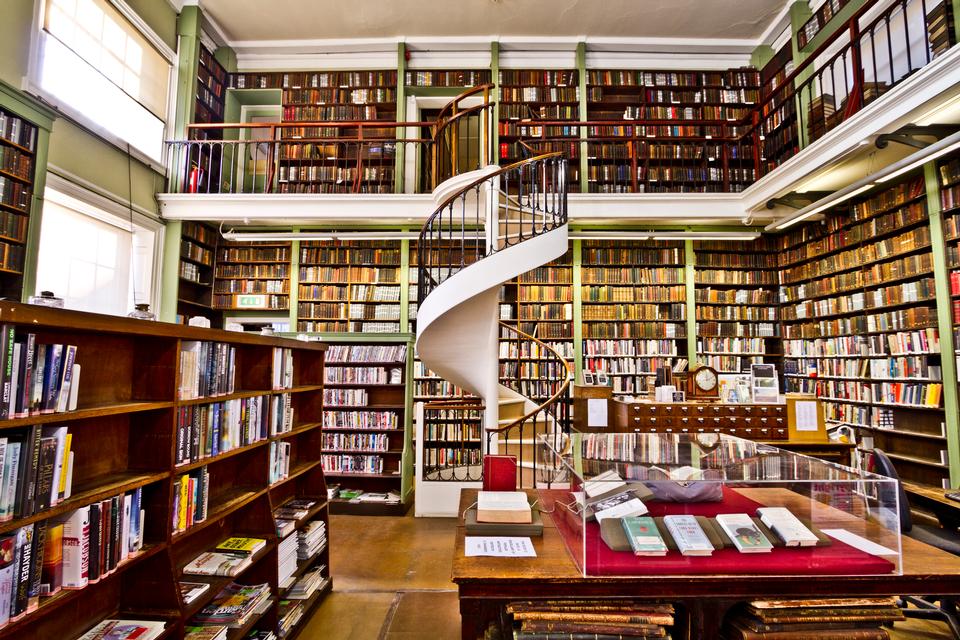

"Enter your favorite book, and get a list of suggested books to read.

These book picks are based on genres to achieve the best book suggestions."

"Can we all bring back blogging, together? Artists, writers, inventors, tinkerers, illustrators, designers—all makers are welcome here.

... Why RSS? Rss is truly decentralized. Readers can then subscribe in aggregate to all the feeds they love best, and read the content in one place with a service like Feedly or Old Reader. And there's no need to point them to a specific RSS feed. Most of these services only need the URL of your blog or newsletter.

... towards a decentralized, curated, diverse creative collective—just one interesting corner of the internet where we can all pool our networks to lift us all up."

- Ash and Ryan

Published at: Wed, 28 Dec 2022 22:59:48 +0100

"A new year brings new calls for a return to personal blogging as an antidote to the toxic and extractive systems of social media. Giving our attention and creative content to major social media corporations has always been a dubious undertaking, although for a long time, that didn't stop me from adopting Facebook and Twitter early as possible places to build community."

- Christopher P. Long

"Today is the 10th anniversary of the death of Aaron Swartz, The Internet's Own Boy. Aaron Swartz was a writer, programmer, web activist and political organizer."

"So how about we make 2023 the year of the personal website? The year in which we launch our first site or redesign our old one, publish a little more often, and add RSS and Webmentions to our websites so that we can write posts back and forth. The year we make our sites more fussy, more quirky, and more personal. The year we document what we improved, share what we learned, and help each other getting started. The year we finally create a community of critical mass around all our personal websites. The year we take back our Web.

I'll start tonight."

- Matthias Ott

Published at: Fri, 06 Jan 2023 20:01:00 +0100

"Welcome to the RSS Club. If you can read this, you’re part of my secret society."

-

The film is set in 2045, with the world on the brink of chaos and collapse. But people have found salvation in the OASIS, a virtual-reality world where they spend all their waking hours. When game creator James Halliday dies, he leaves his immense fortune to the first person who finds a digital Easter egg hidden within the game. A team of five teenagers fights the evil corporation IOI (Innovative Online Industries), which seeks to control the OASIS itself.

Blogs of Indieweb.xyz

This is a directory of the blogs that have posted here. I'm hoping to build a good directory of Indieweb blogs by topic. If you want to be listed, simply send a Webmention!

A while back I bought the https://perl.social/ domain without much immediate use for it.... ... I started setting up an activitypub based network to take the place of the twitter community in the advent that there was an exodus of Perl programmers from twitter. That seems to have been happening so I finally kicked into gear to get it ready for use.

"https://perl.social/ should show you the public face of the community, (if it doesn't let me know). In the upper right you'll find the login button, and can register a new user. Once registered, like all fediverse things you'll be able to be followed by people as such @username@perl.social and can follow other users on other servers by putting them in the search box at the top and then following them." - Ryan Voots

Zero Data Apps.

Own your data, all of it. Apps that let you control your data.

The XMLHttpRequest Definitive guide

Stop searching and read this.

Raise your hand if you've ever read a README file. Now, keep that hand raised if you've ever written a README file. I'm going to take a shot in the dark and assume that your hand is still raised; at least, it's raised in spirit, since you probably refuse to flail your arms about like a crazy person just because I tell you to. After code comments, the README file is one of the most ubiquitous forms of documentation found in software development today. While not every source code repository has a README, it's a good bet that every successful one does.

"In the 1990’s and early 2000’s, there seemed to be a great trend in Hollywood of depicting hacker characters, even in movies that weren’t strictly technology or cyberpunk themed. I absolutely love when these characters appear in movies, and I thought I’d collect a few of my favorites here."

− My Top 10 Favorite Movie Hackers

Here you can find posts and documents regarding 2021/Dusseldorf IndieWebCamp.

In reply to:

Here are the steps I followed to make it work. Someone might find this useful.

1. Create a public key file: public.gpg

gpg --export --armor --output public.gpg nedzad@nedzadhrnjica.com

Upload the file 'public.gpg' to the root of your site.

Edit index.html and add the following line inside the <head> section:

<link rel="pgpkey" href="/public.gpg">

2. Open the site: https://indielogin.com

3. Log in with your domain name: https://nedzadhrnjica.com

4. Choose to log in using GPG.

5. Copy the text from the popup.

6. Create a signature (paste the text, then press Ctrl-D to end the input):

cat | gpg --sign --armor --local-user nedzad@nedzadhrnjica.com

Copy the signature, including "-----BEGIN PGP MESSAGE----- ... -----END PGP MESSAGE-----".

NOTE: If you get the error 'gpg: signing failed: Invalid IPC response', add '--pinentry-mode loopback' to the command, like this:

cat | gpg --sign --armor --local-user nedzad@nedzadhrnjica.com --pinentry-mode loopback

Copy the signature, including "-----BEGIN PGP MESSAGE----- ... -----END PGP MESSAGE-----".

7. Paste it into the popup, replacing the existing text.

8. Click the 'Verify' button.

That's it.
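If the login fails, check whether your page actually exposes the key link. The following is a small check. It assumes the link is written exactly as in step 1, with `rel="pgpkey"` followed by `href="..."`. `pgpkey_href` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the href of the rel="pgpkey" <link> in HTML read from stdin.
# Assumes the attribute order shown above: rel="pgpkey" then href="...".
pgpkey_href() {
    grep -o '<link rel="pgpkey" href="[^"]*"' | sed -e 's/.*href="//' -e 's/"$//'
}
```

For example, `curl -s https://nedzadhrnjica.com/ | pgpkey_href` should print the path of your key file. Fetching that path should return a block that starts with `-----BEGIN PGP PUBLIC KEY BLOCK-----`.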

Everything looks great with GTD until it is time to engage.

Sometimes you forget to look at your next actions list. Sometimes you simply cannot start. Wandering around, wondering what to do next, you default to unimportant, unproductive stuff that is not related to anything you want. You end up stressed, depressed, and tired.

1. Build a routine of engaging!

Force yourself to engage at least once a day, with at least one item.

Block 15 minutes a day in your calendar simply to engage with one item from your next actions list. It can be more than 15 minutes and more than one task, but keep the commitment small. And keep doing it for several weeks.

Forgot to look at the calendar? Write it on a Post-it note or a plain piece of paper and put it in front of you. Make it bother you everywhere you go. Make it bother you all the way until you complete that one item.

Write on it: "Take that one next step! Now! Only one!"

Remember, you do not need to take all of the steps, and all of the projects, and all of the actions. Just that one single step.

You are just developing a new habit: going to the place where you keep your next actions and actually working on them. Eventually, you will go there and do things automatically (without needing the calendar alarm or the note reminder).

You should teach yourself to look at your next actions list every time you are idle, bored, or doing nothing. Just look at the list and choose an appropriate task from it. Yes, even "relax/recharge for 15 minutes" can be a valid task!

To start engaging, force yourself to engage with GTD for at least 15 minutes a day. No more. Build the routine. After some time, you will jump to your GTD next actions list without thinking.

The whole point of GTD is that when you get a few minutes, even unexpectedly, you can get something done. You simply jump to the next actions list and do something from it.

Once you are there, it is easier to avoid defaulting to doing nothing. But if your list is huge...

2. Split your huge list into smaller, more manageable lists.

If there are too many tasks on your list and you cannot decide which one to take on (all of them seem too important), the best thing you can do is split them into smaller, more manageable sublists.

Find out whether you can group them into similar subtasks, or split them into subcontexts.

E.g. if you have a list of tasks related to the computer, you can group them into subtasks:

- email related list of tasks

- spreadsheet related list of tasks

- computer management list of tasks (disk cleaning, files organizing, ...)

- ...

But, do remember to look at them!

For this, it helps to have a general (top-level) list of lists to look at. A list of your next action contexts works well. You first look at your list of contexts, select the context you are in now, choose the subcontext you want to work from, and then check the tasks you can do within that subcontext.

3. Clarify tasks you are avoiding.

Even then, you may keep avoiding some tasks. Why? Often because it is not clear exactly what the task means, so clarify it.

Do you want to call someone, email someone, or write to someone? That kind of indecision can give you a real mental headache.

Get down to the exact physical next action, one you can do without any additional thinking.

This post is influenced by: YouTube / GTD Engage - Productivity Tips and Tricks by Janet Riley / GTD Focus

Sometimes you need to run a simple Perl script, but your web hosting is configured to serve index.php only. Here is a way around that:

Edit index.php

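<?php

// Pass request data to index.pl via environment variables. putenv() does not go through

// a shell, so values must not be wrapped in escapeshellarg() (that would add literal quotes).

foreach ($_SERVER as $key => $value) { if (is_string($value)) putenv("$key=$value"); }

foreach ($_GET as $key => $value) { if (is_string($value)) putenv("GET_$key=$value"); }

foreach ($_POST as $key => $value) { if (is_string($value)) putenv("POST_$key=$value"); }

// Run index.pl from this file's directory; stderr is merged into the output.

echo shell_exec('perl ' . escapeshellarg(__DIR__ . '/index.pl') . ' 2>&1');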

Create your index.pl

#!/usr/bin/perl

use strict;

use warnings;

use Data::Dumper; $Data::Dumper::Sortkeys = 1;

print "Done.\n";

print Dumper( \@INC );

print Dumper( \%ENV );
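putenv() hands values to the child process directly, with no shell parsing in between. A request value therefore arrives in Perl exactly as it was sent, and any shell syntax inside it is not executed. You can see the same effect from a shell with env and printenv. This only simulates the PHP side, and GET_name is just an example variable:

```shell
#!/bin/sh
# Simulate PHP's putenv("GET_name=...") followed by starting a child process.
# The value contains shell syntax, but env passes it through verbatim,
# so nothing is executed and printenv shows the literal text.
env 'GET_name=$(id) & friends' printenv GET_name
# prints: $(id) & friends
```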

Conclusion

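All $_GET variables will be in GET_xxx environment variables ($ENV{GET_xxx} in Perl).

All $_POST variables will be in POST_xxx environment variables ($ENV{POST_xxx} in Perl).

All $_SERVER variables will be set as environment variables under their own names (REQUEST_METHOD, QUERY_STRING, ...), much like in CGI.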

These notes were written from memory. They should be tested.

That's all.

On a default DigitalOcean WordPress installation, you may sometimes see the following error when opening the website:

Error establishing a database connection

Looking in /var/log/syslog, we find:

May 3 08:08:13 myDigitalOceanDroplet kernel: [391622.639370] Out of memory: Killed process 98542 (mysqld) total-vm:165880kB, anon-rss:59772kB, file-rss:2396kB, shmem-rss:0kB, UID:112 pgtables:276kB oom_score_adj:0

This usually happens when WordPress is installed on the smallest droplet size and not tuned properly. A large number of requests through the Apache web server exhausts the memory, and the kernel's OOM killer kills processes (here, mysqld) to keep the system alive. As a quick workaround, you can check for the MySQL process every minute and restart it when needed.

So, how do you do this?

Save this script to /root/monitor-mysqld-process.sh:

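#!/bin/sh

# pgrep -x matches the exact process name "mysqld", so this script

# (whose name also contains "mysqld") is not mistaken for the server.

if ! /usr/bin/pgrep -x mysqld > /dev/null; then

echo "$(date) Restarting mysql service..."

/usr/sbin/service mysql start

fi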

Make the script executable (chmod +x /root/monitor-mysqld-process.sh) and run it from cron every minute:

* * * * * /root/monitor-mysqld-process.sh >> /tmp/cron.monitor-mysqld-process.txt 2>&1

And finally, monitor temporary log:

tail -F /tmp/cron.monitor-mysqld-process.txt

Tue May 3 08:17:02 UTC 2022 Restarting mysql service...
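The log also records how often the droplet runs out of memory. The sketch below counts restarts per day. The function name is hypothetical, and it assumes the default `date` format shown above.

```shell
#!/bin/sh
# Count MySQL restarts per day from the monitor's log.
# Each restart line looks like: "Tue May 3 08:17:02 UTC 2022 Restarting mysql service..."
# Fields used: $6 = year, $2 = month, $3 = day. The log is chronological,
# so lines from the same day are adjacent and uniq -c can count them.
restarts_per_day() {
    awk '/Restarting mysql service/ { print $6, $2, $3 }' "$1" | uniq -c
}
```

Usage: `restarts_per_day /tmp/cron.monitor-mysqld-process.txt`. Frequent restarts are a sign that the droplet needs more memory or tuning, rather than relying on this workaround.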

Here is a set of online tools you can use to check your email server configuration:

Mail delivery check

- https://mail-tester.com

https://www.experte.com/spam-checker - "...it uses well-known spam filters and blacklists to check the spam score of a mail. But in addition, it also checks whether Gmail classifies the email as spam and into which inbox the mail is placed." - Janis von Bleichert, Experte.com

Requires login:

Check SPAM score of your email message (copy/paste)

Additional services

New:

Updates

- 2022-06-01 Wednesday 18:49:45 - added https://www.experte.com/spam-checker

This is how to save and reuse macros recorded in the Vim editor.

Use 'q' followed by a letter to record a macro.

This just goes into one of the copy/paste registers, so you can paste it as normal with the "xp or "xP commands in normal mode.

To save it, you open up .vimrc and paste the content, then the register will be around the next time you start vim. The format is something like:

let @q='macro content'
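You may prefer to append the line to .vimrc from the shell. If so, the one quoting rule to get right is that a single quote inside Vim's single-quoted strings is written twice (''). Here is a sketch of a helper; `vim_let_register` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print a .vimrc line that stores TEXT in register REG.
# In Vim's single-quoted (literal) strings, ' is written as ''.
# Note: trailing newlines in TEXT are dropped by the command substitution.
vim_let_register() {
    reg="$1"
    text="$2"
    escaped=$(printf '%s' "$text" | sed "s/'/''/g")
    printf "let @%s='%s'\n" "$reg" "$escaped"
}
```

For example, `vim_let_register q "it's done" >> ~/.vimrc` appends `let @q='it''s done'`.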

- From normal mode: qq, enter whatever commands, then q to stop recording.

- Open .vimrc.

- Use "qp to paste the macro into your let @q='...' line. It may be better to use CTRL-R CTRL-R q in insert mode, which inserts the register content literally instead of as typed.

To insert special characters (like escape, ...), use CTRL-V <ESC>.

More examples:

:let @a="iHello World!\<Esc>"

You must use double quotes to be able to use special keys like in \<Esc>.

How to execute the current line as a Vim command?

To execute the current line as an ex command, you may use:

yy:@"

- Start recording the macro:

qq - do whatever you want

- Stop recording the macro:

q

- Paste it into the current document:

<Shift+o>let @a='<CTRL+R><CTRL+R>q'

- Modify the macro the way you like it.

- Run the line (this executes the let command and stores the macro in register a):

yy:@" (or yy:<Ctrl+R>"<Backspace><Enter>)

See: https://stackoverflow.com/questions/14385998/how-can-i-execute-the-current-line-as-vim-ex-commands/14386090

Very often I find myself working on something on a remote server and wanting a remote git repository for my changes. I almost never set one up, though, because of the process: creating a new public key, adding it to the gitolite admin repository, ... A lot of steps.

Here is a procedure to start using git as soon as possible. Once you have some 'free' time (usually near the end of the day), you can go through the remaining steps in one sitting.

The important thing is to get the work into git as soon as possible. Permissions and public keys we will leave for later.

Here is the workflow.

User starts working on repository

cd project/

git init

git add -A .

git commit -m 'initial commit'

git push --set-upstream gitanon@git.nedzadhrnjica.com:test/this/is/my/repository master

You can now start working with your own repository:

- edit file(s)

- git add file(s)

- git commit -m 'message about the change'

- git push

- repeat

Admin can review what new repositories are created

ssh git@git.nedzadhrnjica.com info test/

User can create new public key and start working privately on this repository

Give that user a key. A temporary key!

ssh-keygen

# IMPORTANT: protect the key with a passphrase!!!

cat ~/.ssh/id_rsa.pub

# TODO - How to easily send a .pub key ?

cp ~/.ssh/id_rsa.pub .

git add id_rsa.pub

git status

git commit -m 'added .pub for current user'

git status

git push

Now, send the .pub key to the admin. If you followed the steps above, the .pub key is committed to the repository, so the admin can get it from there.

Note: It is important that every temporary key has a passphrase. We will rotate these keys, and we do not want a key left lying around for someone else to use.

Admin, adding user's temporary key to the system

cd ~/git/gitolite/git.nedzadhrnjica.com/

cd keydir/nedzadhrnjica/temp/

mkdir remoteserver.com/

cp user.pub remoteserver.com/nedzadhrnjica.pub

git add remoteserver.com/nedzadhrnjica.pub

git commit -m 'added temporary key for server remoteserver.com'

git push

Admin, rename repository from temporary one into permanent/regular one

Clone test repository into real-one:

git clone git@git.nedzadhrnjica.com:test/this/is/my/repository

cd repository/

git remote rename origin temp

git remote add origin git@git.nedzadhrnjica.com:this/is/my/repository

git config branch.master.remote origin

git push

Remove test repository:

ssh git@git.nedzadhrnjica.com D unlock test/this/is/my/repository

ssh git@git.nedzadhrnjica.com D rm test/this/is/my/repository

Alternatively, instead of removing it right away, you can leave the temporary repository in place with a note saying where the project has moved:

git clone git@git.nedzadhrnjica.com:test/this/is/my/repository

cd repository/

git commit --allow-empty -m 'Repository moved to :this/is/my/repository'

git push

# Finally, remove the local clone, since we do not need it anymore

cd ..

rm -fr repository/

This temporary repository will be left 'hanging' on the server, until all its users (actually only you) stop using it. You can easily remove it later on with steps listed previously.

User, update repository to the new one listed by admin

Once you have sent the .pub key to the admin and the admin has added it, you can replace the user in .git/config:

sed -i -e 's/gitanon@/git@/' .git/config

And, continue working on your project.
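The admin moves the repository from test/... to its permanent path, so the URL you pushed to also has to change. Below is a sketch of that mapping. It assumes the naming used above, where `gitanon@HOST:test/PATH` becomes `git@HOST:PATH`. `permanent_url` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: map a temporary anonymous URL to its permanent location.
# gitanon@HOST:test/PATH -> git@HOST:PATH ; other URLs pass through unchanged.
permanent_url() {
    printf '%s\n' "$1" | sed -e 's/^gitanon@/git@/' -e 's|:test/|:|'
}
```

The first push used a URL with --set-upstream, so Git should have recorded that URL as branch.master.remote. You can check this with `git config branch.master.remote`. If so, you could update it with `git config branch.master.remote "$(permanent_url "$(git config branch.master.remote)")"`.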

Additional notes

When working with git repositories, always keep all of them in the same local directory:

~/git/*

This way, you will always have a list of all your repositories at one place, so you can easily review them, work on them, and improve them.

If you have projects in any other directories, you can always link from there to the git project into this subdirectory.

Make it a habit.

Do not even create 'temporary' .git repositories anywhere else. If you want to test something, create repository in ~/git/test/name and link this subdir to the place you are working with.

# create test repository

git init ~/git/test/123

ln -s ~/git/test/123 123

cd 123/

# work on it

vi notes.md

git add notes.md

git commit -m 'added notes.md'

git push   # only works once a remote has been added to this repository

# finish testing, remove it

cd ..

rm 123   # no trailing slash: this removes only the symlink

# remove the test repository,

# or do it later, if you do not have time now or want to come back to it

rm -fr ~/git/test/123/

A client contacted me because the contact form on his website had suddenly stopped sending emails.

The site is hosted on GoDaddy, and the form is built with the Contact Form 7 plugin.

The odd part was that, after the user pressed 'Send', the form still showed a green 'message sent' notice.

The logs showed that the message was actually handed to local mail delivery (the default). OK.

When I tried installing WP SMTP to send the message through SMTP instead, I found that outbound ports 25, 465, and 587 to remote SMTP servers are filtered.

Sending a test email with WP Test Mail succeeded every time; the message reached the remote recipient.

After debugging and analyzing every part of the sending process, the problem turned out to be the message body itself. It contained the user's full domain name, typed in manually ( https://domain.com ). That domain was blacklisted by GoDaddy's mail servers, so every email containing it was marked as sent but silently dropped. Removing just that URL ( https://domain.com ) from the message body solved the problem.

Solved.

Code for /root/update-cloudflare-ufw.sh:

#!/bin/sh

# Update weekly:

# Add this script to cron, using 'crontab -e'

# 0 0 * * 1 /root/update-cloudflare-ufw.sh > /dev/null 2>&1

TEMPFILE="/root/tmp.cloudflare_ips.tmp"

# Stop if a download fails, so rules are never deleted based on an empty or partial list

curl -fsS https://www.cloudflare.com/ips-v4 -o "$TEMPFILE" || exit 1

echo >> "$TEMPFILE"  # in case the IPv4 list does not end with a newline

curl -fsS https://www.cloudflare.com/ips-v6 >> "$TEMPFILE" || exit 1

## Allow HTTP/HTTPS (TCP ports 80 and 443) from all Cloudflare IPs, with a temporary comment

for cfip in `cat "$TEMPFILE"`; do ufw allow proto tcp from $cfip to any port 80,443 comment 'Cloudflare new IP'; done

# Remove old, non-updated rules (those still carrying the 'Cloudflare IP' comment)

ufw show added | grep --color=never 'Cloudflare IP' | sed -e "s/^ufw //" | while read rule; do sh -c "ufw delete $rule"; done

# Update rules with new comment

for cfip in `cat "$TEMPFILE"`; do ufw allow proto tcp from $cfip to any port 80,443 comment 'Cloudflare IP'; done

ufw reload > /dev/null

## How to install

This script will maintain itself, automatically removing any old IPs or IP segments found within active rules.

Copy the script to /root/update-cloudflare-ufw.sh, and make it executable:

chmod +x /root/update-cloudflare-ufw.sh

Add it to cron, using crontab -e:

0 0 * * 1 /root/update-cloudflare-ufw.sh > /dev/null 2>&1
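The script deletes rules based on whatever it downloaded, so a broken download is the dangerous case. As an extra safeguard, you could validate the list before touching ufw. This is a sketch: `cf_ranges_ok` is a hypothetical name, and it only checks the shape of each line, not full address validity.

```shell
#!/bin/sh
# Hypothetical pre-check: succeed only if FILE holds at least one line and
# every non-empty line looks like an IPv4/IPv6 CIDR range (e.g. 1.2.3.0/24, 2400:cb00::/32).
cf_ranges_ok() {
    file="$1"
    grep -q . "$file" || return 1   # empty or missing download
    # Print lines that are neither empty nor CIDR-shaped; fail if there are any
    ! grep -v -E '^$|^[0-9a-fA-F:.]+/[0-9]{1,3}$' "$file" | grep -q .
}
```

Call it as `cf_ranges_ok "$TEMPFILE" || exit 1` right after the downloads. It stops the script when the file is empty or contains something else, such as an HTML error page.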

We will use knockd to hide SSH from scripted brute-force tools wandering around the Internet.

Operating system used:

- Ubuntu 18.04

Preparation

Install knockd

sudo apt install knockd

sudo systemctl enable knockd

sudo service knockd start

Configure knockd

After installation, the default opening knock sequence is 7000,8000,9000, and the default closing sequence is 9000,8000,7000.

We will remove these lines, and add our own setup.

Do choose your own sequence.

This is my /etc/knockd.conf :

[options]

UseSyslog

[SSH]

sequence = 23800,9258,29015

seq_timeout = 5

start_command = ufw insert 1 allow from %IP% to any port 22

tcpflags = syn

cmd_timeout = 10

stop_command = ufw delete allow from %IP% to any port 22

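This configures knockd to listen for a knock on the 3 specified ports, in order, with the whole sequence completed within 5 seconds (seq_timeout). Once the sequence is complete, port 22 is opened for the knocking IP for 10 seconds (cmd_timeout) using the given ufw commands. An SSH session established within that window stays up after the rule is removed, because ufw allows already-established connections.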

Test

nc -w 1 server.example.com 23800

nc -w 1 server.example.com 9258

nc -w 1 server.example.com 29015

ssh server.example.com

Alternatively, instead of netcat, you can use the knock client on your laptop:

knock -d 300 server.example.com 23800 9258 29015

ssh server.example.com
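If you use the netcat variant often, you can put the knocks and the ssh call in one function. This is a sketch that assumes nc is installed on your laptop. `knock_ssh` is a hypothetical name, and NC and SSH are optional override variables.

```shell
#!/bin/sh
# Hypothetical helper: send the knock sequence with netcat, then start ssh.
# Each "nc -w 1" waits up to about a second, so three knocks fit in the 5 s seq_timeout,
# and ssh starts right away, inside the 10 s cmd_timeout window.
knock_ssh() {
    host="$1"
    shift
    for port in "$@"; do
        # Closed/filtered ports make nc fail; that is expected, so keep going
        ${NC:-nc} -w 1 "$host" "$port" < /dev/null || true
    done
    ${SSH:-ssh} "$host"
}
```

Usage: `knock_ssh server.example.com 23800 9258 29015`.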

This process can be easily followed in /var/log/syslog:

Jan 30 13:40:17 test9 knockd: 80.80.47.198: SSH: Stage 1

Jan 30 13:40:18 test9 knockd: 80.80.47.198: SSH: Stage 2

Jan 30 13:40:19 test9 knockd: 80.80.47.198: SSH: Stage 3

Jan 30 13:40:19 test9 knockd: 80.80.47.198: SSH: OPEN SESAME

Jan 30 13:40:19 test9 knockd: SSH: running command: ufw insert 1 allow from 80.80.47.198 to any port 22

Jan 30 13:40:19 test9 knockd[126430]: Rule inserted

Jan 30 13:40:29 test9 knockd: 80.80.47.198: SSH: command timeout

Jan 30 13:40:29 test9 knockd: SSH: running command: ufw delete allow from 80.80.47.198 to any port 22

Jan 30 13:40:29 test9 knockd[126465]: Rule deleted

Disable existing OpenSSH rule, if any

If the previous test worked, and especially if you see the line SSH: OPEN SESAME, you can delete the existing rule:

sudo ufw delete allow OpenSSH

After these steps, you will be able to connect to your server, anytime you like, but will keep port 22 closed for any Internet-wide brute-force attack on your SSH.

All you need to do is to remember the port sequence!

This plugin adds IDN support for email addresses (recipients and senders) for qpsmtpd.

Short notes:

- tested on qpsmtpd-0.96

- based on

Download here.

Upwork stopped providing API access to clients, except on the Business and Enterprise plans.

To get jobs on Upwork quickly, I need to be able to search quickly for the jobs I prefer to work on.

Here is a quick scraper to do so:

Add a bookmarklet to the browser.

It uses the JavaScript code from:

https://nedzadhrnjica.com/testupwork.js

Open https://www.upwork.com/ab/find-work/ in fullscreen.

Click on the bookmarklet.

Wait 30-60 seconds to collect the last 100 jobs from the list.

They are sent to https://supremeadmin.com/upworkjobs

You can review them on:

/root/upworkjobs/databases/

Sometimes you need to run a simple Perl script without creating a full Perl web app. The easiest way is to run it with nginx + fcgiwrap.

Install required software

apt install nginx

apt install fcgiwrap

Configure nginx

/etc/nginx/sites-enabled/test.com.conf

location ~ \.pl$ {

try_files $uri =404; # without this, we will get '403 Forbidden'

fastcgi_param SCRIPT_NAME $fastcgi_script_name;

fastcgi_pass unix:/var/run/fcgiwrap.socket;

include fastcgi_params;

# run it immediately, without buffering ....

fastcgi_param NO_BUFFERING "1";

fastcgi_keep_conn on;

}
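fcgiwrap starts the requested file as a program. Besides being reachable by nginx, the script therefore needs execute permission and a `#!` interpreter line. Below is a quick pre-flight check; the helper name is hypothetical. Note that `[ -x ]` tests permissions for the user running the check, which may differ from the user fcgiwrap runs as (typically www-data on Ubuntu).

```shell
#!/bin/sh
# Hypothetical pre-flight check for a script served through fcgiwrap:
# it must exist, be executable, and start with a "#!" interpreter line.
check_cgi_script() {
    f="$1"
    [ -f "$f" ] || { echo "missing: $f"; return 1; }
    [ -x "$f" ] || { echo "not executable (try chmod +x): $f"; return 1; }
    case "$(head -n 1 "$f")" in
        '#!'*) return 0 ;;
        *) echo "no #! line: $f"; return 1 ;;
    esac
}
```

Usage: `check_cgi_script /var/www/test.com/test.pl`. The path is only an example.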

Note:

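The .pl files MUST be in a path accessible to nginx. They must also be executable (chmod +x) and start with a #! line, because fcgiwrap runs them as programs.

Buffering is active by default. With the current installation on Ubuntu, there is no way to disable buffering and enable streaming.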

Example:

#!/usr/bin/perl

use strict;

use warnings;

# Disable Perl's output buffering (autoflush STDOUT):

$|=1;

# tell browser, not to wait for content...

print "Content-Encoding: none\n";

print "Content-Type: text/html; charset=ISO-8859-1;\n";

print "\n\n";

print "<h1>Perl is working!</h1>\n";

sleep 1;

print "test2<br>\n";

sleep 2;

print "test3<br>\n";

sleep 3;

print "test4<br>\n";

Tested on:

system1:

Ubuntu 20.04.1 LTS

apt show fcgiwrap

Version: 1.1.0-12

You should never trust user input. Always validate data on the server side.

SQL injections

The first line of defense against SQL injection is using prepared statements. If your queries use prepared statements, it does not matter what the user puts into the form fields you bind as parameters, because code and data are already separated. The database treats anything a user injects as data, never as code.

CSRF / Cross-site Request Forgery

Some ideas on protecting POST requests

https://perishablepress.com/protect-post-requests/

THINK ABOUT:

- protect form from blank submission

- do a data validation - on client side, on server side

Secure programming is a way of writing software so that it is protected from vulnerabilities, attacks, and anything else that can harm the software or the system using it. Because it deals with securing the code, secure programming is also known as secure coding.

Secure code will help to prevent many cyber-attacks from happening because it removes the vulnerabilities many exploits rely on.

Secure coding is the practice of developing computer software in a way that guards against the accidental introduction of security vulnerabilities. Defects, bugs and logic flaws are consistently the primary cause of commonly exploited software vulnerabilities.

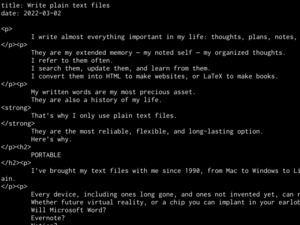

Keep all the posts in the same directory. It is easier to have them all in one directory than spread across subdirectories. To edit them easily, you can use vi with bash autocompletion:

Instead of:

```vi 2020-12-23-something.md```

, you will be able to use:

```vi something<TAB>```

Add the following to ~/.bashrc:

```

# autocomplete vi

_vi_middle_of_file_completion() {

mapfile -t COMPREPLY < <( find . -maxdepth 1 -type f | grep --color=never -i "${COMP_WORDS[COMP_CWORD]}" )

}

complete -o nospace -F _vi_middle_of_file_completion vi

```
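Here is one possible refinement of the completion function, written as a sketch. `grep -F` treats the typed text as a fixed string rather than a regular expression, so names containing characters like `.` or `+` match literally. `sed` strips the `./` prefix that find adds. Like the original, it requires bash for mapfile, arrays, and process substitution.

```shell
#!/usr/bin/env bash
# Complete "vi <part><TAB>" with files in the current directory whose names
# contain <part> anywhere (case-insensitive, matched as a fixed string).
_vi_middle_of_file_completion() {
    local word="${COMP_WORDS[COMP_CWORD]}"
    mapfile -t COMPREPLY < <(
        find . -maxdepth 1 -type f | sed 's|^\./||' | grep -F -i -- "$word"
    )
}
complete -o nospace -F _vi_middle_of_file_completion vi
```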

Sitting in the room

It is a cute, warm, autumn-like winter day. The snow stopped falling last evening, and all night the rain slowly melted it. This morning the snowman was just a pile of snow.

Coffee is on the table, and light from the bright clouds is entering the room... The water bottle is half full. I have drunk enough already.

On a default install of OpenVAS on Kali, email from scheduled tasks does not get sent. Here is a two-step process to fix that.

There are 2 issues that need to be solved with OpenVAS + Kali.

Versions used at this client:

root@kali:~# uname -a

Linux kali 4.13.0-kali1-amd64 #1 SMP Debian 4.13.10-1kali1 (2017-11-03) x86_64 GNU/Linux

root@kali:~# apt show openvas* | egrep "Package|Version"

WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

Package: openvas

Version: 9~kali3

APT-Sources: http://http.kali.org/kali kali-rolling/main amd64 Packages

Package: openvas-manager

Version: 7.0.2-1

APT-Sources: http://http.kali.org/kali kali-rolling/main amd64 Packages

Package: openvas-scanner

Version: 5.1.1-2kali1

APT-Sources: http://http.kali.org/kali kali-rolling/main amd64 Packages

Package: openvas-cli

Version: 1.4.5-1+b1

APT-Sources: http://http.kali.org/kali kali-rolling/main amd64 Packages

Package: openvas-manager-common

Version: 7.0.2-1

APT-Sources: http://http.kali.org/kali kali-rolling/main amd64 Packages

Package: openvas-nasl

Version: 9.0.1-4

APT-Sources: http://http.kali.org/kali kali-rolling/main amd64 Packages

Package: openvas-scanner-dbgsym

Version: 5.1.1-2kali1

Auto-Built-Package: debug-symbols

APT-Sources: http://http.kali.org/kali kali-rolling/main amd64 Packages

Package: openvas-administrator

Package: openvas-plugins

Package: openvas-server

Package: openvas-client

1) ISSUE 1 - unable to send email from kali

DESCRIPTION: The Exim4 mail server is configured for local mail delivery only. To make it deliver remotely, we have to configure a smarthost to send mail out.

As the smarthost, I used an SMTP server that listens on port 587. To send authenticated SMTP through it, you need your login name and password.

To monitor outgoing email, watch the file /var/log/exim4/mainlog:

tail -f /var/log/exim4/mainlog

PROCESS, SHORT DESCRIPTION (a longer example follows below):

To reconfigure the exim4 MTA, do the following steps:

Step 1. Run exim4 configuration script:

dpkg-reconfigure exim4-config

- Select 'mail sent by smarthost; no local mail' (in the middle of menu).

- system mail name: openvas

- IP-addresses to listen on for incoming SMTP connections: 127.0.0.1 ; ::1

- Other destinations for which mail is accepted: localhost.localdomain

- Visible domain name for local users: from.openvas.server.nedzadhrnjica.com

- IP address or host name of the outgoing smarthost: mailserver.nedzadhrnjica.com::587

- Keep number of DNS-queries minimal (Dial-on-Demand): No

- Split configuration into small files? No

To regenerate the exim configuration from the values entered, run:

update-exim4.conf

Step 2. Edit /etc/exim4/passwd.client to set the login and password for your outgoing SMTP server, one host:login:password line per server:

vi passwd.client
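passwd.client stores the password in plain text, so the file should be kept private. Below is a sketch of a helper that appends the line and tightens permissions. `add_smarthost_login` is a hypothetical name, and it assumes none of the fields contains a colon.

```shell
#!/bin/sh
# Hypothetical helper: append a smarthost login to exim's passwd.client
# (format host:login:password, as in the file's own example) and make the
# file readable only by its owner and group.
add_smarthost_login() {
    file="$1" host="$2" login="$3" password="$4"
    printf '%s:%s:%s\n' "$host" "$login" "$password" >> "$file"
    chmod 640 "$file"
}
```

For example: `add_smarthost_login /etc/exim4/passwd.client mailserver.example.com login password`. On a real Debian/Kali system, follow it with `chown root:Debian-exim /etc/exim4/passwd.client` so that exim can still read the file.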

Transcript of the actual session (test failing):

root@kali:/etc/exim4# date

Sri Nov 15 23:21:29 EST 2017

root@kali:/etc/exim4# echo "test1" | mail -s "test1 subject" nhrnjica@gmail.com

root@kali:/etc/exim4# tail /var/log/exim4/mainlog

2017-11-15 23:21:39 1eFBgJ-0006B1-IF <= root@kali U=root P=local S=339

2017-11-15 23:21:39 1eFBgJ-0006B1-IF ** nhrnjica@gmail.com R=nonlocal: Mailing to remote domains not supported

2017-11-15 23:21:39 1eFBgJ-0006B3-Jh <= <> R=1eFBgJ-0006B1-IF U=Debian-exim P=local S=1492

2017-11-15 23:21:39 1eFBgJ-0006B1-IF Completed

2017-11-15 23:21:39 1eFBgJ-0006B3-Jh ** nedzad@nedzadhrnjica.com <root@kali> R=nonlocal: Mailing to remote domains not supported

2017-11-15 23:21:39 1eFBgJ-0006B3-Jh Frozen (delivery error message)

root@kali:/etc/exim4# cd /etc/exim4

root@kali:/etc/exim4# ls -l

total 92

drwxr-xr-x 9 root root 4096 Nov 7 14:43 conf.d

-rw-r--r-- 1 root root 78843 Mar 9 2017 exim4.conf.template

-rw-r--r-- 1 root root 204 Nov 15 23:21 passwd.client

-rw-r--r-- 1 root root 1043 Nov 15 23:15 update-exim4.conf.conf

root@kali:/etc/exim4# cat passwd.client

# password file used when the local exim is authenticating to a remote

# host as a client.

#

# see exim4_passwd_client(5) for more documentation

#

# Example:

### target.mail.server.example:login:password

Transcript of an actual session (fixing things up):

root@kali:/etc/exim4# cd /etc/exim4

root@kali:/etc/exim4# echo 'mailserver.nedzadhrnjica.com:mail@nedzadhrnjica.com:SomePassword5231#!' >> passwd.client

root@kali:/etc/exim4# cat passwd.client

# password file used when the local exim is authenticating to a remote

# host as a client.

#

# see exim4_passwd_client(5) for more documentation

#

# Example:

### target.mail.server.example:login:password

mailserver.nedzadhrnjica.com:mail@nedzadhrnjica.com:SomePassword5231#!

root@kali:/etc/exim4# cat update-exim4.conf.conf

# /etc/exim4/update-exim4.conf.conf

#

# Edit this file and /etc/mailname by hand and execute update-exim4.conf

# yourself or use 'dpkg-reconfigure exim4-config'

#

# Please note that this is _not_ a dpkg-conffile and that automatic changes

# to this file might happen. The code handling this will honor your local

# changes, so this is usually fine, but will break local schemes that mess

# around with multiple versions of the file.

#

# update-exim4.conf uses this file to determine variable values to generate

# exim configuration macros for the configuration file.

#

# Most settings found in here do have corresponding questions in the

# Debconf configuration, but not all of them.

#

# This is a Debian specific file

dc_eximconfig_configtype='local'

dc_other_hostnames='localhost.localdomain'

dc_local_interfaces='127.0.0.1 ; ::1'

dc_readhost=''

dc_relay_domains=''

dc_minimaldns='false'

dc_relay_nets=''

dc_smarthost=''

CFILEMODE='644'

dc_use_split_config='false'

dc_hide_mailname=''

dc_mailname_in_oh='true'

dc_localdelivery='mail_spool'

root@kali:/etc/exim4# dpkg-reconfigure exim4-config

root@kali:/etc/exim4# cat update-exim4.conf.conf

# /etc/exim4/update-exim4.conf.conf

#

# Edit this file and /etc/mailname by hand and execute update-exim4.conf

# yourself or use 'dpkg-reconfigure exim4-config'

#

# Please note that this is _not_ a dpkg-conffile and that automatic changes

# to this file might happen. The code handling this will honor your local

# changes, so this is usually fine, but will break local schemes that mess

# around with multiple versions of the file.

#

# update-exim4.conf uses this file to determine variable values to generate

# exim configuration macros for the configuration file.

#

# Most settings found in here do have corresponding questions in the

# Debconf configuration, but not all of them.

#

# This is a Debian specific file

dc_eximconfig_configtype='satellite'

dc_other_hostnames='localhost.localdomain'

dc_local_interfaces='127.0.0.1 ; ::1'

dc_readhost='from.openvas.server.nedzadhrnjica.com'

dc_relay_domains=''

dc_minimaldns='false'

dc_relay_nets=''

dc_smarthost='mailserver.nedzadhrnjica.com::587'

CFILEMODE='644'

dc_use_split_config='false'

dc_hide_mailname='true'

dc_mailname_in_oh='true'

dc_localdelivery='mail_spool'

Transcript of an actual session (successful delivery):

root@kali:/etc/exim4# echo "test2" | mail -s "test2 subject" nhrnjica@gmail.com

root@kali:/etc/exim4# tail /var/log/exim4/mainlog

2017-11-15 23:23:53 1eFB45-0005C1-1B Message is frozen

2017-11-15 23:23:53 1eFBbV-00060t-P5 Message is frozen

2017-11-15 23:23:53 1eFB2L-0005AY-K1 Message is frozen

2017-11-15 23:23:53 1eFB2L-0005Ah-UI Message is frozen

2017-11-15 23:23:53 1eFB2M-0005Aw-AK Message is frozen

2017-11-15 23:23:53 1eFB2M-0005At-80 Message is frozen

2017-11-15 23:23:53 End queue run: pid=24477

2017-11-15 23:24:28 1eFBj2-0006NH-Ju <= root@kali U=root P=local S=339

2017-11-15 23:24:35 1eFBj2-0006NH-Ju => nhrnjica@gmail.com R=smarthost T=remote_smtp_smarthost H=mailserver.nedzadhrnjica.com [107.170.103.28] X=TLS1.2:ECDHE_RSA_AES_256_GCM_SHA384:256 CV=no DN="C=XY,ST=unknown,L=unknown,O=QSMTPD,OU=Server,CN=mailserver.nedzadhrnjica.com,EMAIL=postmaster@mailserver.nedzadhrnjica.com" A=cram_md5 C="250 Queued! 1510806275 qp 25674 <E1eFBj2-0006NH-Ju@kali>"

2017-11-15 23:24:35 1eFBj2-0006NH-Ju Completed

root@kali:/etc/exim4#

There you are!
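The earlier failed tests leave frozen messages in the queue (see the "Frozen (delivery error message)" entry and the repeated "Message is frozen" lines in the log). After checking them with "exim4 -bp", which lists the queue, they can be removed. A minimal sketch, assuming the exiqgrep utility from Debian's exim4 packages is installed; remove_frozen_messages is a hypothetical name, and it deletes every frozen message, so review the queue first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete all frozen messages from the exim4 queue.
remove_frozen_messages() {
  local ids
  # exiqgrep -z selects frozen messages, -i prints only their message IDs.
  ids=$(exiqgrep -z -i)
  if [ -z "$ids" ]; then
    echo "No frozen messages in the queue."
    return 0
  fi
  # exim4 -Mrm removes the listed messages without delivering them.
  # $ids is deliberately unquoted so each ID becomes a separate argument.
  exim4 -Mrm $ids
}
```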

2) ISSUE with scheduled tasks:

cd /var/lib/openvas/CA/

mkdir old/

cp * old/

cp clientcert.pem servercert.pem

cd /var/lib/openvas/private/CA/

mkdir old/

cp * old/

cp clientkey.pem serverkey.pem
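The same steps as a reusable function, in case you need to repeat them. It only automates the copies above: it backs up the .pem files once (the commands above copy everything in the directory; here a second run keeps the original backup instead of overwriting it) and then copies the client certificate and key over the server ones. The directory defaults are the paths used in this post; openvas_reuse_client_cert is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: scripted version of the OpenVAS certificate workaround.
openvas_reuse_client_cert() {
  local ca_dir="${1:-/var/lib/openvas/CA}"
  local key_dir="${2:-/var/lib/openvas/private/CA}"
  local dir
  for dir in "$ca_dir" "$key_dir"; do
    # Back up only once, so a second run cannot overwrite the original files.
    if [ ! -d "$dir/old" ]; then
      mkdir "$dir/old"
      cp -p "$dir"/*.pem "$dir/old/"
    fi
  done
  # Plain cp onto an existing file keeps that file's permissions (important for the key).
  cp "$ca_dir/clientcert.pem" "$ca_dir/servercert.pem"
  cp "$key_dir/clientkey.pem" "$key_dir/serverkey.pem"
}
```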

Conclusion

After this process, you only have to create a scheduled task in OpenVAS and add an email alert to it. Once that is configured, the schedule will run and you will start receiving emails.

Regards, Nedzad

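Jekyll LiveReload is not enabled by default. Here is how to enable it. (Jekyll 3.7 and later have LiveReload built into 'jekyll serve', so the plugin below is mainly needed on older versions.)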

Add these lines to your site's Gemfile:

group :jekyll_plugins do

  gem 'jekyll-livereload'

end

And then execute:

bundle

Usage:

jekyll server --livereload

To make it permanent, edit '_config.yml' and add the following line:

livereload: true
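If you maintain several sites, the setting can be added idempotently. YAML takes the value without a trailing semicolon: with "livereload: true;" the value would be the string "true;" rather than the boolean true. A minimal sketch; enable_jekyll_livereload is a hypothetical name, and "sed -i.bak" leaves a backup named _config.yml.bak whenever it rewrites an existing line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure _config.yml contains "livereload: true".
enable_jekyll_livereload() {
  local config="${1:-_config.yml}"
  if grep -qE '^livereload:' "$config" 2>/dev/null; then
    # Rewrite an existing setting (e.g. "livereload: false") in place.
    sed -i.bak -E 's/^livereload:.*/livereload: true/' "$config"
  else
    # No setting yet: append it on its own line.
    printf '\nlivereload: true\n' >> "$config"
  fi
}
```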

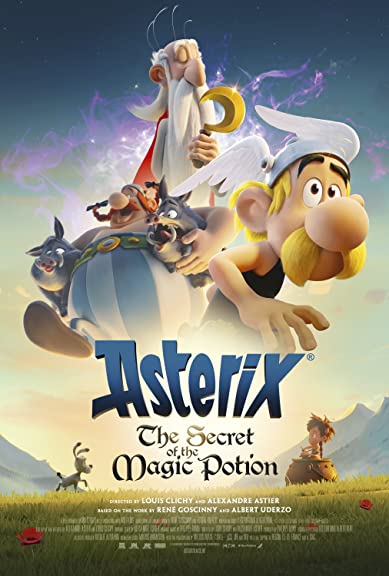

When Getafix/Panoramix fell from a tall tree and broke his foot, he decided it was time to pass the magic potion recipe on to a young druid. All the men set off on an adventure to find a good, trustworthy druid.

The women were left to protect the village.